2. Land Cover Validation with LUCAS dataset#

This is an example of a land cover product validation using LUCAS points. The process is using the class Validator to perform the main validation steps.

2.1. Install#

2.1.1. Note for Google Colab#

[ ]:

!git clone https://gitlab.com/geoharmonizer_inea/st_lucas/st_lucas-python-package.git

!(cd st_lucas-python-package/; git pull)

import sys

sys.path.insert(0, './st_lucas-python-package/docs/notebooks/')

2.1.2. Install requirements#

[ ]:

!pip3 install geopandas pyyaml==6.0 ipyleaflet

print("INSTALLATION COMPLETED")

Now we have to restart runtime: Runtime -> Restart runtime (on Google Colab) or Kernel -> Restart (on JupyterLab).

[1]:

import os

import yaml

from osgeo import gdal

from osgeo import gdalconst

import geopandas as gpd

import numpy

import urllib

import matplotlib.pyplot as plt

%matplotlib inline

from validator import Validator

2.2. Land Cover validation#

2.2.1. Configure validation#

Check contents of the config.yaml file.

[2]:

# configuration with sample data

config_file = "sample_land_cover/config.yaml" # On Google Colab: "./st_lucas-python-package/docs/notebooks/sample_land_cover/config.yaml"

with open(config_file, 'r') as file:

file_contents = file.read()

print(file_contents)

project:

name: 'Geoharmonizer Land Cover validation'

abbrev: 'cz_lc_18'

run_id: '20210907'

# land cover & reference definitions

input:

# raster map

path: ./sample_land_cover

in_ras: cz_land_cover_osm_2018.tif

ndv: 0

legend: legend.yaml

# vector reference

in_vec: cz_lucas_points_l1_2018.shp

ref_att: 'label_l1'

# validation report settings

report:

path: ./sample_land_cover

dir_name: 'lc_2018_validation'

# validation points for GIS exploration

validation_points:

file_name: 'validation_points'

ogr_format: 'ESRI Shapefile'

epsg: 3035

2.2.2. Initialize the validator#

Initilize the validator by passing the config file or a Python dictionary with the same structure

[3]:

validation = Validator(config_file)

Validation project initialized!

Inputs:

cz_land_cover_osm_2018.tif

cz_lucas_points_l1_2018.shp

2.2.3. Check validity of the inputs#

[4]:

# Check if you can read the geodata

inputs_valid = validation.check_inputs()

print('Validation data ready: {}'.format(inputs_valid))

Validation data ready: True

[5]:

# Check contents of the raster and vector geodata

with open(config_file) as file:

cfg = yaml.load(file, Loader=yaml.FullLoader)

# Vector data

vector_fn = os.path.join(cfg['input']['path'], cfg['input']['in_vec'])

gdf = gpd.read_file(vector_fn)

gdf.head()

[5]:

| point_id | survey_dat | gps_altitu | gps_lat | gps_long | nuts0 | obs_dist | obs_type | lc1 | lc1_perc | label_l1 | geometry | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 48262936 | 2018-05-10 | 309 | 49.321800 | 16.956880 | CZ | 6.0 | 1 | C33 | 5 | NaN | POINT (4826005.575 2935998.024) |

| 1 | 47162980 | 2018-08-02 | 409 | 49.799533 | 15.492019 | CZ | 22.0 | 1 | B75 | 4 | 3.0 | POINT (4716021.636 2979995.779) |

| 2 | 46183058 | 2018-07-19 | 242 | 50.557290 | 14.193572 | CZ | 0.0 | 1 | B13 | 3 | 2.0 | POINT (4617999.865 3057999.905) |

| 3 | 45262962 | 2018-07-17 | 545 | 49.734924 | 12.844097 | CZ | 0.0 | 1 | B35 | 4 | 2.0 | POINT (4526000.963 2961999.910) |

| 4 | 47463004 | 2018-07-24 | 251 | 49.993666 | 15.933803 | CZ | 0.0 | 1 | B11 | 4 | 2.0 | POINT (4745999.810 3004000.403) |

[6]:

# Check the legend

legend_file = "sample_land_cover/legend.yaml" # On Google Colab: "./st_lucas-python-package/docs/notebooks/sample_land_cover/legend.yaml"

with open(legend_file, "r") as file:

legend = file.read()

print(legend)

legend:

1: Artificial

2: Cropland

3: Perenial

4: Forest

5: Shrubland

6: Grassland

7: Barren

8: Wetlands

9: Water

10: Glaciers

[7]:

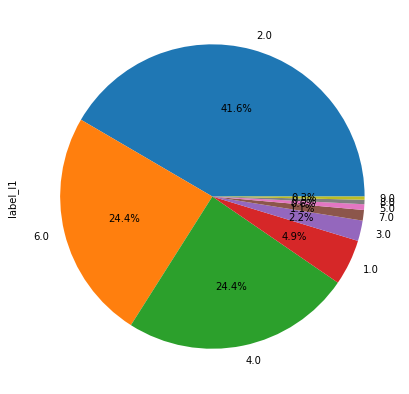

# view distribution of the classes

attribute = cfg['input']['ref_att']

gdf[attribute].value_counts().plot.pie(figsize=(7, 7), autopct='%1.1f%%')

[7]:

<AxesSubplot:ylabel='label_l1'>

[8]:

# Show a map of the data

from ipyleaflet import Map, GeoData, basemaps, LayersControl

gdf_4326 = gdf.to_crs("EPSG:4326")

center = gdf_4326.dissolve().centroid

m = Map(center=(float(center.y), float(center.x)), zoom = 7, basemap= basemaps.OpenStreetMap.Mapnik)

lucas_gd = GeoData(geo_dataframe = gdf_4326,

style={'color': 'black', 'fillColor': '#3366cc', 'opacity':0.05, 'weight':1.9, 'dashArray':'2', 'fillOpacity':0.6},

point_style={'radius': 2, 'color': 'red', 'fillOpacity': 0.8, 'fillColor': 'blue', 'weight': 3},

name='LUCAS points')

m.add_layer(lucas_gd)

m.add_control(LayersControl())

m

/tmp/ipykernel_17328/1624841052.py:6: UserWarning: Geometry is in a geographic CRS. Results from 'centroid' are likely incorrect. Use 'GeoSeries.to_crs()' to re-project geometries to a projected CRS before this operation.

center = gdf_4326.dissolve().centroid

2.2.4. Run land cover map and reference overlay#

[9]:

validation.overlay()

Processed: 100% | 4930 reference points.

[9]:

0

2.2.5. Report the validation results#

[10]:

# short report

validation.short_report()

Validation indicators:

---

Overall map accuracy is 84.16 %

No. passed: 3426

No. failed: 645

No. points used in validation: 4071

[10]:

0

[11]:

# full report

validation.report()

Machine learning validation indicators (per class):

---

precision recall f1-score support

1 0.44 0.84 0.58 185

2 0.86 0.97 0.91 1801

3 0.62 0.13 0.22 61

4 0.93 0.90 0.92 989

5 0.15 0.18 0.16 17

6 0.91 0.63 0.75 952

7 0.62 0.11 0.19 46

8 0.25 0.27 0.26 11

9 0.30 0.33 0.32 9

accuracy 0.84 4071

macro avg 0.56 0.49 0.48 4071

weighted avg 0.86 0.84 0.84 4071

Classical LC validation indicators:

---

overall_accuracy : 0.8416

producers_accuracy : 0.8583

users_accuracy : 0.8416

kappa : 0.7670

[11]:

0

[12]:

# Save the validation report to a text file for a future use

validation.save_report()

Saving validation report:

---

cz_lc_18_validation_report.txt

[12]:

0

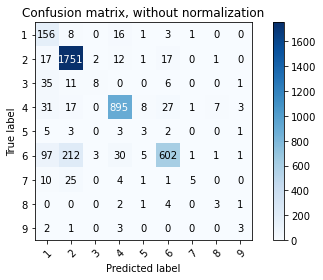

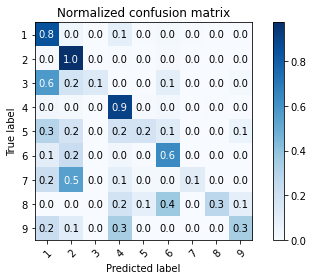

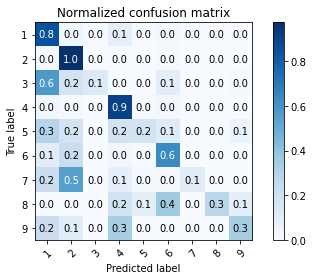

2.2.6. Plot confusion matrix#

[13]:

validation.show_confusion_matrix()

[13]:

0

[14]:

# Check the legend again

print(legend)

legend:

1: Artificial

2: Cropland

3: Perenial

4: Forest

5: Shrubland

6: Grassland

7: Barren

8: Wetlands

9: Water

10: Glaciers

[15]:

# Save the confusion matrix

validation.save_normalized_confusion_matrix()

[15]:

0

[16]:

# You can also save the plots into the validation directory for later use

validation.save_confusion_matrix()

validation.save_normalized_confusion_matrix()

[16]:

0

2.2.7. Save the validation overlay geodata to a vector#

You can

[17]:

validation.save_vec()

Saving validation data:

---

Vector data: validation_points.shp created from overlay data

[17]:

0

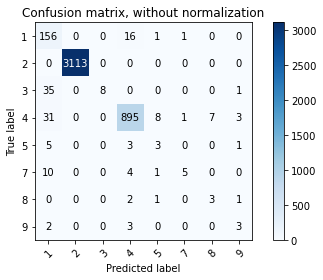

2.2.8. Classes aggregation#

[18]:

config_aggregation = {

'project':

{'name': 'Geoharmonizer Land Cover validation',

'abbrev': 'cz_lc_18',

'run_id': '20210907'

},

'input':

{'path': './sample_land_cover',

'in_ras': 'cz_land_cover_osm_2018.tif',

'ndv': 0,

'legend': 'legend.yaml',

'in_vec': 'cz_lucas_points_l1_2018.shp',

'ref_att': 'label_l1'

},

'report':

{'path': './sample_land_cover',

'dir_name': 'lc_2018_validation_aggregation'

},

'validation_points':

{'file_name': 'validation_points',

'ogr_format': 'ESRI Shapefile',

'epsg': 3035

}

}

[19]:

validation_lc_aggregated = Validator(config_aggregation)

Validation project initialized!

Inputs:

cz_land_cover_osm_2018.tif

cz_lucas_points_l1_2018.shp

[20]:

# 2: agriculture (arable land & grassland)

aggregartion = {

2: [2, 6]

}

[21]:

validation_lc_aggregated.overlay(aggregartion)

Processed: 100% | 4930 reference points.

[21]:

0

[22]:

validation_lc_aggregated.report()

Machine learning validation indicators (per class):

---

precision recall f1-score support

1 0.65 0.90 0.76 174

2 1.00 1.00 1.00 3113

3 1.00 0.18 0.31 44

4 0.97 0.95 0.96 945

5 0.21 0.25 0.23 12

7 0.71 0.25 0.37 20

8 0.30 0.43 0.35 7

9 0.33 0.38 0.35 8

accuracy 0.97 4323

macro avg 0.65 0.54 0.54 4323

weighted avg 0.97 0.97 0.97 4323

Classical LC validation indicators:

---

overall_accuracy : 0.9683

producers_accuracy : 0.9735

users_accuracy : 0.9683

kappa : 0.9267

[22]:

0

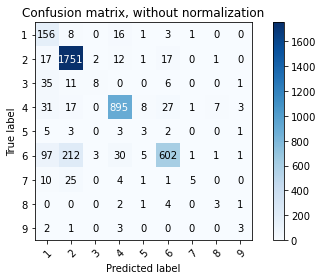

[23]:

validation_lc_aggregated.show_confusion_matrix()

[23]:

0